Deep learning has emerged as one of the most transformative technologies of the 21st century. It is reshaping industries, enabling machines to perform tasks that once required human perception and judgment, and driving innovation in everything from healthcare to autonomous vehicles. At its core, deep learning is a subset of machine learning, itself a branch of artificial intelligence (AI). It uses artificial neural networks (algorithms loosely inspired by the structure and function of the human brain) to process data and learn patterns.

Unlike traditional machine learning algorithms that rely on manually engineered features, deep learning models can automatically extract relevant features from raw data. This ability to process massive amounts of unstructured data such as images, text, and speech has made deep learning the backbone of many AI-driven applications.

The key reasons deep learning has gained such prominence in recent years include:

Abundance of data from digital devices, IoT sensors, and online activities.

Increased computational power through GPUs, TPUs, and cloud infrastructure.

Advances in algorithms and open-source deep learning frameworks.

As we move into 2025, deep learning continues to evolve rapidly, powering more capable AI models and pushing the boundaries of what machines can achieve.

The roots of deep learning date back to the 1940s, when researchers first began exploring artificial neural networks (ANNs). However, progress was slow due to limited computational resources and a lack of large datasets.

1943 – McCulloch and Pitts proposed the first mathematical model of an artificial neuron.

1958 – Frank Rosenblatt developed the Perceptron, an early form of a neural network.

1980s – The backpropagation algorithm, popularized by Rumelhart, Hinton, and Williams in 1986, made it practical to train multi-layer neural networks.

1990s – Interest in neural networks waned due to the AI winter, caused by computational limits and underwhelming results.

2006 – Geoffrey Hinton and colleagues revived the field by showing that deep neural networks could be trained effectively when pre-trained layer by layer.

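2012 – AlexNet, a deep convolutional neural network, won the ImageNet Large Scale Visual Recognition Challenge by a wide margin (a top-5 error rate of 15.3% versus 26.2% for the runner-up), marking the start of the modern era of deep learning.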

Since then, deep learning has powered breakthroughs in computer vision, natural language processing (NLP), reinforcement learning, and generative AI.

Before diving deeper, let’s define some core deep learning terms:

Neural Network – A computational model made of interconnected nodes (neurons) arranged in layers.

Layers – Input, hidden, and output layers through which data flows in a network.

Activation Function – A nonlinear function applied to a neuron’s weighted input that determines its output (e.g., ReLU, sigmoid, tanh).

Loss Function – Measures the difference between predicted and actual values.

Optimization Algorithm – Methods like stochastic gradient descent (SGD) that adjust weights to minimize loss.

Overfitting – When a model memorizes training data but performs poorly on unseen data.

Regularization – Techniques like dropout or L2 regularization to prevent overfitting.

Batch Size & Epochs – The batch size is the number of training examples processed before each weight update; an epoch is one complete pass through the training dataset.
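Several of these terms can be made concrete in a few lines of plain Python. The sketch below uses only the standard library and implements the three activation functions named above, two common loss functions (mean squared error and binary cross-entropy), and the arithmetic linking batch size to the number of weight updates per epoch. The function names are illustrative; real frameworks ship vectorized, numerically hardened versions of each.

```python
import math

# Activation functions: each maps a neuron's weighted input z to its output.
def relu(z: float) -> float:
    return max(0.0, z)

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z: float) -> float:
    return math.tanh(z)

# Loss functions: measure how far predictions are from the actual values.
def mse(preds: list[float], targets: list[float]) -> float:
    """Mean squared error, a typical regression loss."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)

def binary_cross_entropy(probs: list[float], labels: list[int], eps: float = 1e-12) -> float:
    """Binary cross-entropy for predicted probabilities and labels in {0, 1}."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)

# Batch size and epochs: weight updates performed in one pass over the data.
def updates_per_epoch(num_examples: int, batch_size: int) -> int:
    return math.ceil(num_examples / batch_size)  # the last batch may be smaller

if __name__ == "__main__":
    print(relu(-2.0), relu(3.0))           # 0.0 3.0
    print(sigmoid(0.0), tanh(0.0))         # 0.5 0.0
    print(mse([2.5, 0.0], [3.0, -0.5]))    # 0.25
    print(updates_per_epoch(10_000, 32))   # 313
```

For example, 10,000 training examples with a batch size of 32 give 313 weight updates per epoch, so 10 epochs mean 3,130 updates.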

At its heart, deep learning is loosely inspired by how the human brain processes information, but in practice it follows a well-defined workflow:

Data Collection – Large volumes of labeled or unlabeled data are gathered.

Data Preprocessing – Cleaning, normalization, and transformation to make it suitable for training.

Model Architecture Selection – Choosing the right type of network (e.g., CNN for images, RNN for sequences).

Forward Propagation – Data moves through the network layer by layer, producing predictions.

Loss Calculation – The difference between predicted and actual output is measured.

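Backpropagation – Gradients of the loss with respect to every weight are computed layer by layer, from the output backward, and an optimizer uses them to update the weights and reduce the error.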

Model Evaluation – Performance is tested on validation datasets.

Deployment – The trained model is used in production environments.
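The core of this workflow (forward propagation, loss calculation, backpropagation, weight updates, and evaluation) can be sketched in plain Python. To keep it short, this sketch assumes a hypothetical toy task (learning y = 2x + 1) and uses a single linear neuron rather than a deep network, so the backward pass is one application of the chain rule; a deep network repeats the same steps layer by layer. All names and hyperparameters are illustrative.

```python
import random

def make_data(n, rng):
    # Hypothetical toy dataset: noise-free samples of y = 2x + 1.
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 2.0 * x + 1.0) for x in xs]

def forward(w, b, x):
    # Forward propagation for a single linear neuron.
    return w * x + b

def train(train_set, epochs=200, lr=0.1, batch_size=8, seed=0):
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # initial weights
    data = list(train_set)
    for _ in range(epochs):
        rng.shuffle(data)  # new batch order every epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Backpropagation: gradients of the MSE loss w.r.t. w and b.
            grad_w = grad_b = 0.0
            for x, y in batch:
                err = forward(w, b, x) - y
                grad_w += 2.0 * err * x
                grad_b += 2.0 * err
            # SGD update: step against the batch-averaged gradient.
            w -= lr * grad_w / len(batch)
            b -= lr * grad_b / len(batch)
    return w, b

def evaluate(w, b, dataset):
    # Model evaluation: mean squared error on held-out data.
    return sum((forward(w, b, x) - y) ** 2 for x, y in dataset) / len(dataset)

rng = random.Random(42)
train_set, val_set = make_data(64, rng), make_data(16, rng)
w, b = train(train_set)
print(f"w={w:.3f}, b={b:.3f}, val MSE={evaluate(w, b, val_set):.6f}")
```

Because the toy data is noise-free, the learned parameters should land very close to w = 2 and b = 1, with a validation error near zero; on real data the validation loss is what tells you whether the model generalizes.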

The magic of deep learning lies in its ability to learn representations of data at multiple levels of abstraction, enabling it to handle complex tasks like facial recognition or real-time language translation.

Although deep learning is a subset of machine learning, the two differ in several key ways:

| Feature | Machine Learning | Deep Learning |
|---|---|---|
| Feature Extraction | Manual, domain-specific | Automatic, data-driven |
| Data Requirements | Works with small to medium datasets | Requires large datasets |
| Training Time | Faster | Slower, resource-intensive |
| Performance | Plateaus with complex tasks | Excels with unstructured, complex data |
| Interpretability | More explainable | Often a “black box” |

In short, deep learning automates much of the feature engineering process and thrives on big data, but it comes at the cost of higher computational demands.

Deep learning encompasses multiple specialized architectures designed for different types of data.

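Convolutional Neural Networks (CNNs) are best suited for image recognition, object detection, and video processing. They use convolutional layers, which slide small learned filters across the input, to capture spatial patterns such as edges and textures.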
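To show what a convolutional layer computes, here is a minimal pure-Python sketch of one "valid" (no padding), stride-1 2D convolution. Like most deep learning frameworks, it actually computes cross-correlation (the kernel is not flipped). The kernel is hand-picked for illustration; in a CNN its values would be learned, and real layers add input channels, many filters, a bias, and a nonlinearity.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation), stride 1, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the kernel and the image patch under it.
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output

# A 4x4 image with a vertical edge: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A hand-picked vertical-edge detector (a CNN would learn such weights).
kernel = [
    [-1, 1],
    [-1, 1],
]
for row in conv2d(image, kernel):
    print(row)
```

Every output row is `[0.0, 2.0, 0.0]`: the filter responds only at the position where dark pixels meet bright ones, which is the kind of spatial pattern the early layers of a CNN learn to detect.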

Recurrent Neural Networks (RNNs) are ideal for sequential data such as time series and text. Variants like LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) use gating mechanisms to mitigate the vanishing gradient problem.
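The vanishing gradient problem can be seen in a tiny example. This sketch assumes a vanilla recurrent network reduced to scalars, with hypothetical weights w_x = 1.0 and w_h = 0.5. By the chain rule, the gradient of the last hidden state with respect to the first is a product of one factor w_h * (1 - h_t^2) per time step, so when each factor is below 1 in magnitude the gradient shrinks geometrically with sequence length. LSTM and GRU cells are not shown; their gates add paths that help gradients survive over long sequences.

```python
import math

def rnn_forward(xs, w_x, w_h, b=0.0, h0=0.0):
    """Unroll a scalar vanilla RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1] + b))
    return hs

def grad_last_wrt_first(hs, w_h):
    """d h_T / d h_0 via the chain rule: product of w_h * tanh'(a_t) over steps."""
    g = 1.0
    for h in hs[1:]:
        g *= w_h * (1.0 - h * h)  # tanh'(a_t) = 1 - tanh(a_t)^2 = 1 - h_t^2
    return g

xs = [0.5] * 30  # a constant input sequence of length 30
hs = rnn_forward(xs, w_x=1.0, w_h=0.5)
for steps in (1, 5, 10, 30):
    print(steps, grad_last_wrt_first(hs[: steps + 1], w_h=0.5))
```

Since every factor here is smaller than 0.5, the gradient after 30 steps is below 0.5^30 (about 1e-9), far too small for the first inputs to influence learning.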

Transformers dominate NLP tasks. They use self-attention mechanisms to process all positions of a sequence in parallel, enabling models like GPT, BERT, and T5.
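Self-attention is what lets transformers look at a whole sequence at once. The sketch below implements scaled dot-product attention, softmax(QK^T / sqrt(d)) V, in plain Python. As a simplifying assumption it sets Q = K = V = the input embeddings; real transformers first multiply the input by learned query, key, and value matrices, use multiple heads, and may apply masking. The token values are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    scores = matmul(x, transpose(x))  # every position scored against every position
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, x), weights

# Three token embeddings of dimension 2 (hypothetical values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = self_attention(tokens)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of attention weights sums to 1 and says how much each token draws on every other token. Because all rows come from one matrix product rather than a step-by-step recurrence, the computation parallelizes across positions.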

Generative Adversarial Networks (GANs) pit two networks, a generator and a discriminator, against each other to create realistic synthetic data such as images or videos.
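This competition is usually written as the minimax objective from the original GAN formulation (Goodfellow et al., 2014). Here D(x) is the discriminator's estimated probability that x is real data, and G(z) is the generator's output for a random noise vector z. The discriminator tries to maximize V, while the generator tries to minimize it by making D(G(z)) close to 1:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

In practice, the generator is often trained to maximize log D(G(z)) instead, which gives stronger gradients early in training when the discriminator easily rejects its samples.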

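Autoencoders learn to compress input data into a compact representation and then reconstruct it, which makes them useful for unsupervised learning, dimensionality reduction, and anomaly detection.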

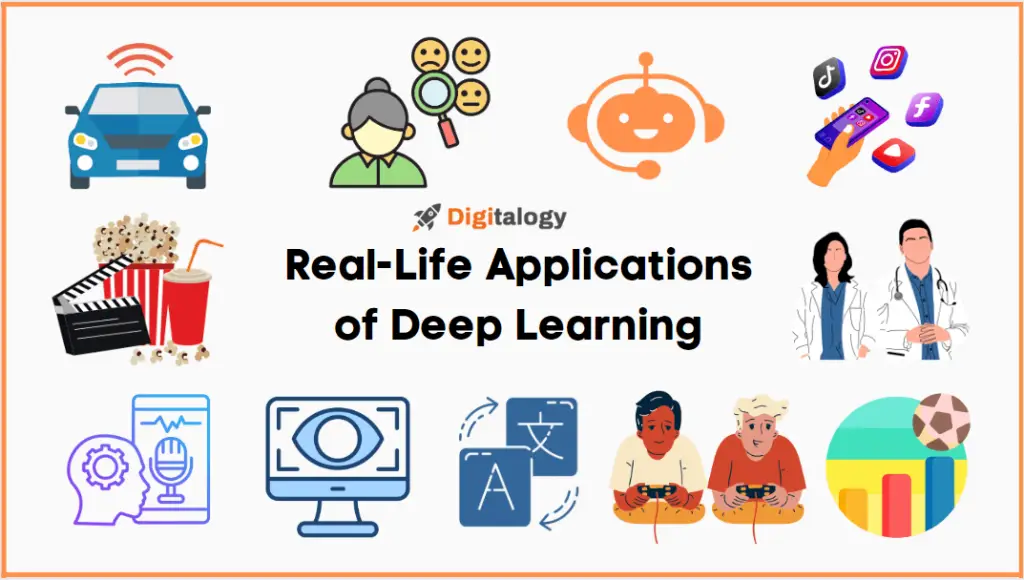

Deep learning is transforming nearly every sector:

Healthcare – Medical imaging analysis, disease prediction, drug discovery.

Automotive – Self-driving car perception systems.

Finance – Fraud detection, algorithmic trading, credit scoring.

Retail – Personalized recommendations, demand forecasting.

Manufacturing – Predictive maintenance, quality control.

Entertainment – Content recommendation, deepfake generation.

Agriculture – Crop monitoring, pest detection.

Cybersecurity – Threat detection, malware classification.

Popular deep learning frameworks include:

TensorFlow – Google’s scalable, flexible library.

PyTorch – Favored for research due to dynamic computation graphs.

Keras – High-level API for quick prototyping.

MXNet, Caffe, Theano – Older libraries, now largely superseded by TensorFlow and PyTorch.

Cloud platforms like AWS SageMaker, Google Cloud AI, and Azure Machine Learning offer managed services for training and deployment.

Despite its power, deep learning faces hurdles:

Data Hunger – Needs vast amounts of high-quality labeled data.

Computational Cost – Training large models can be expensive.

Interpretability – Hard to explain decision-making.

Bias and Fairness – Models can inherit biases from data.

Security Risks – Vulnerable to adversarial attacks.
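An adversarial attack can be surprisingly simple. This sketch applies the Fast Gradient Sign Method (FGSM) to a logistic-regression model, which stands in for a neural network here because its input gradient has a closed form: (p - y) * w. The weights and the input are hypothetical values chosen for illustration. Each feature is shifted by at most eps in the direction that increases the loss, and that is enough to flip the prediction.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(g):
    return (g > 0) - (g < 0)

def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: move each feature by eps in the direction
    that increases the cross-entropy loss for the true label y."""
    p = predict(w, b, x)
    grad_x = [(p - y) * wi for wi in w]  # d(loss)/dx for logistic regression
    return [xi + eps * sign(g) for xi, g in zip(x, grad_x)]

# Hypothetical trained weights and a correctly classified input (true label 1).
w, b = [2.0, -3.0, 1.5], 0.1
x, y = [0.4, 0.1, 0.2], 1
x_adv = fgsm(w, b, x, y, eps=0.2)
print(f"clean p={predict(w, b, x):.3f}, adversarial p={predict(w, b, x_adv):.3f}")
```

The clean input scores about 0.71 (class 1), while the perturbed input, which differs by only 0.2 per feature, scores about 0.40 and is misclassified. Deep networks are vulnerable to the same idea with perturbations that are often imperceptible to humans.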

To ensure successful deep learning projects:

Start with clear problem definitions.

Collect diverse, representative datasets.

Use transfer learning to save time and resources.

Monitor for overfitting and apply regularization.

Test on real-world scenarios.

Continuously update and retrain models.
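One common way to monitor for overfitting is early stopping: track the validation loss after each epoch and stop once it has not improved for a set number of epochs (the "patience"), keeping the best checkpoint. The sketch below is a framework-agnostic version of that rule; the loss curve is hypothetical, and frameworks such as Keras offer built-in callbacks for the same purpose.

```python
def early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch): stop once the validation loss has failed
    to improve by more than min_delta for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch  # new best checkpoint
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1  # never triggered

# Hypothetical validation-loss curve: improves, then rises as the model overfits.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.67]
best, stopped = early_stopping(val_losses, patience=3)
print(f"best epoch={best}, stopped at epoch={stopped}")
```

Here training stops at epoch 6 and the weights from epoch 3, where validation loss bottomed out, are the ones to keep.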

As of 2025, these trends are shaping the field:

Smaller, more efficient models for edge devices.

Foundation models trained on massive multimodal datasets.

Explainable AI (XAI) to improve trust.

Federated learning for privacy-preserving model training.

Neurosymbolic AI blending deep learning with symbolic reasoning.
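Federated learning keeps raw data on each device and shares only model updates. Its central step, in the widely used Federated Averaging (FedAvg) approach, is a weighted average of the clients' locally trained parameters. The sketch below shows only that aggregation step with hypothetical client weights and dataset sizes. A real system repeats it over many rounds and typically adds protections such as secure aggregation.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg-style aggregation: average each parameter across clients,
    weighting each client by its number of local training examples."""
    total = sum(client_sizes)
    num_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(num_params)
    ]

# Hypothetical: three clients each trained a copy of a 2-parameter model locally.
# Only these weight vectors reach the server; the raw data stays on the clients.
clients = [[1.0, 2.0], [3.0, 0.0], [2.0, 2.0]]
sizes = [100, 300, 100]
print(federated_average(clients, sizes))
```

The client with 300 examples pulls the first parameter toward its value of 3.0, giving a global model of roughly [2.4, 0.8] that is sent back to every client for the next round.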

Deep learning has evolved from an academic curiosity to a powerhouse technology reshaping the world. With the ability to learn from vast, complex datasets, deep learning is at the heart of AI breakthroughs in vision, language, and decision-making.

However, deep learning is not a magic bullet — it comes with challenges that must be addressed responsibly. Organizations that combine deep learning expertise with ethical practices will be best positioned to lead in the AI-driven future.

In 2025 and beyond, as computational power continues to grow and data becomes more abundant, deep learning will only become more pervasive, opening possibilities that today might seem like science fiction.